In my previous post, I explained the theory behind the Publish on your Own Site, Syndicate Elsewhere (POSSE) script I’ve been working on for this blog. POSSE is a strategy for managing and distributing your writing. Since this Hugo site is my home base on the web, adding an automated syndication script seemed like a fun and powerful project.

Here’s a public repository for the script on GitHub: hugo-posse

It’s available under the MIT license, and free for anyone to use or modify. The plan for this post is to talk about how I use it with my site, and what’s next for improving this site.

Setup and configuration

For this site, posse.py lives in the top level of the project directory along with some other helper Python scripts. This is the easiest way for me to call them, whether they help back up images or build and deploy the site. The README includes detailed installation instructions.

The script relies on several libraries, most notably atproto and mastodon.py, so these are included in a requirements.txt file. You can use pip and this file to quickly install these libraries.

To store credentials securely, I keep them in a .env file in the root of my Hugo site project. My site configuration is stored on GitHub, which means it isn’t suitable for holding keys or passwords, so I instruct Git to ignore this file (in my .gitignore). If you plan on using this script, I highly recommend doing the same.
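To make the pattern concrete, here’s a minimal sketch of reading a .env file with only the standard library. This is an illustration rather than the code in posse.py, and the helper name `load_env_file` is hypothetical. The real key names are listed in the README, and a library such as python-dotenv does the same job with more care.

```python
import os
from pathlib import Path


def load_env_file(path=".env"):
    """Read KEY=VALUE pairs from a .env file into os.environ.

    Variables already set in the environment win over values in the file.
    Returns a dict of the pairs found in the file.
    """
    env_path = Path(path)
    loaded = {}
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and lines without an assignment
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip("'\"")
        loaded[key] = value
        os.environ.setdefault(key, value)
    return loaded
```

Because the file is listed in .gitignore, the secrets stay on my machine while the rest of the project lives in the public repository.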

The most complicated part of setting this up is getting credentials from Bluesky and Mastodon, but this is well documented in the script’s README file.

Testing

Honestly, coding with Gemini as an assistant made this go very quickly. I have some Python knowledge, so basically this was “centaur-like” development, where I had a very effective intern pushing this project to completion. With a bit of planning in place, I was able to use Gemini to come up with strategies for handling TOML/YAML pretty easily, along with loops and structures to handle leaf bundles vs. individual files in the parsing and execution logic.

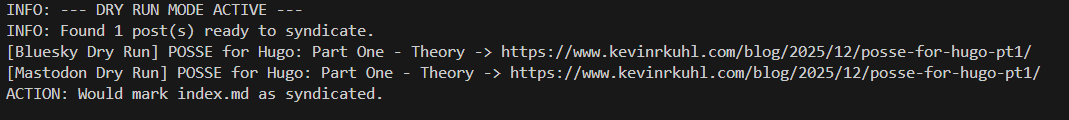

I wanted to include the ability to do a dry run before actual runs, which is enabled with the --dry-run argument.
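For anyone curious how a flag like this is usually wired up, here’s a small sketch using argparse. The function names `build_parser` and `publish` are made up for this example, and the real script’s internals may look different.

```python
import argparse


def build_parser():
    """Command-line interface: a content folder plus an optional --dry-run flag."""
    parser = argparse.ArgumentParser(description="Syndicate Hugo posts (sketch).")
    parser.add_argument("content_dir", help="Folder of posts to scan, e.g. content/blog")
    parser.add_argument(
        "--dry-run",
        action="store_true",
        help="Show what would be posted without posting anything",
    )
    return parser


def publish(post_title, dry_run):
    """Return a description of the action taken (or skipped) for one post."""
    if dry_run:
        return f"[dry run] would syndicate: {post_title}"
    # A real run would call the Bluesky/Mastodon clients here.
    return f"syndicated: {post_title}"


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(publish("Example post", args.dry_run))
```

The key idea is that the dry-run check sits right next to the code that talks to the network, so nothing gets posted unless the flag is absent.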

The one unexpected element was a compatibility conflict between atproto and Pydantic. When I ran tests I’d get a blob of warning text:

...\pydantic\_internal\_generate_schema.py:2249: UnsupportedFieldAttributeWarning: The 'default' attribute with value None was provided to the `Field()` function, which has no effect in the context it was used. 'default' is field-specific metadata, and can only be attached to a model field using `Annotated` metadata or by assignment. This may have happened because an `Annotated` type alias using the `type` statement was used, or if the `Field()` function was attached to a single member of a union type.

warnings.warn(

This warning doesn’t have any effect on the finished script, so I’ve decided to suppress it in the import block for the script. This is a bit of technical debt, which I’ll keep in a note in Obsidian for maintenance on this project.
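Here’s roughly what that kind of suppression can look like. It’s a sketch that assumes the warning fires while atproto’s models are being defined at import time, which is why the filter is installed first. It matches on the start of the message text so that other warnings still come through. The function name is hypothetical.

```python
import warnings


def silence_pydantic_field_warning():
    """Ignore only the specific Pydantic warning, matched by its message prefix."""
    warnings.filterwarnings(
        "ignore",
        message=r"The 'default' attribute with value None was provided",
    )


# Install the filter before importing atproto so it is active
# when Pydantic builds its schemas.
silence_pydantic_field_warning()
# from atproto import Client  # left commented so the sketch runs without atproto installed
```

Filtering on the message is narrower than ignoring every warning from Pydantic, which makes it less likely to hide a real problem later.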

Also, for this to work, you need strong Markdown file hygiene. The parser expects front matter to start on line one of the file, so an extra hard return at the start of the file can result in the parser skipping it. Better parsing is also on the list of to-dos for this project.
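One possible direction for that to-do is to look for the first non-blank line instead of assuming line one. The sketch below is an idea, not the current behavior of the script, and `split_front_matter` is a name invented for this example. It assumes YAML front matter is fenced with --- and TOML with +++, which are Hugo’s conventions.

```python
def split_front_matter(text):
    """Split a Markdown file into (format, front_matter, body).

    Leading blank lines and a UTF-8 byte order mark are ignored, so a stray
    hard return at the top does not hide the front matter.
    Returns (None, "", text) when no front matter is found.
    """
    delimiters = {"---": "yaml", "+++": "toml"}
    lines = text.lstrip("\ufeff").splitlines()

    # Find the first non-blank line instead of assuming it is line one
    start = 0
    while start < len(lines) and not lines[start].strip():
        start += 1
    if start == len(lines):
        return None, "", text

    opener = lines[start].strip()
    if opener not in delimiters:
        return None, "", text

    # Look for the matching closing delimiter
    for end in range(start + 1, len(lines)):
        if lines[end].strip() == opener:
            front_matter = "\n".join(lines[start + 1:end])
            body = "\n".join(lines[end + 1:])
            return delimiters[opener], front_matter, body

    return None, "", text  # opening delimiter was never closed
```

The returned front matter string would still need to go through a YAML or TOML parser, but at least the file is no longer skipped because of one stray newline.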

The workflow

Ok! So the workflow is pretty simple. The goal is to make syndication feel like writing, not like managing or repeatedly copying and pasting everywhere. Here’s how this plays out in writing:

When I draft my Hugo content, I can decide whether I want to syndicate the post. If I do, I add two standard elements to the front matter for that post: syndicate_to (a list of strings for syndication targets) and microblog_content (the post for Bluesky/Mastodon). If I don’t want to syndicate the content, I don’t. In the microblog_content field, I add a summary of what I say in the blog post and a couple of hashtags.

There’s nothing that ties me to writing inside of the social media apps, which lets me stay focused on finishing a quality blog post, without the noise and distraction of social media.

When I’m ready to publish, I go through my build/deploy process. I’ve decided to automate that, but for manually building and deploying my Hugo blog, it looks like this:

rm -r -fo public

hugo build

gsutil -m rsync -r -d public gs://example-project

Then I wait a bit for the changes to hit my site, followed by running:

python posse.py content/blog --dry-run

Here’s the result of doing a dry run with a single post ready to syndicate:

This lets me preview the manifest and actions that the script would perform. If I’m happy, I run:

python posse.py content/blog

And here’s the successful feedback:

Since there’s a check on the syndicated: true boolean flag first, I don’t have to worry about posting duplicate content. After syndicating a post, the script will automatically add syndicated: true to the post. Between the --dry-run argument and the syndicated: true check, I really tried to prioritize safety so that I don’t spam my social media feeds.
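The duplicate guard boils down to a small decision on each post’s front matter. Here’s a sketch of that logic, assuming the front matter has already been parsed into a dictionary. The function names and the target values in the usage note are illustrative, not lifted from the script.

```python
def should_syndicate(front_matter):
    """Decide whether a post is eligible, given its parsed front matter (a dict)."""
    # Already posted once: never post again
    if front_matter.get("syndicated") is True:
        return False
    # Both a target list and the microblog text are required
    targets = front_matter.get("syndicate_to") or []
    text = (front_matter.get("microblog_content") or "").strip()
    return bool(targets) and bool(text)


def mark_syndicated(front_matter):
    """Return a copy of the front matter with the duplicate guard set."""
    updated = dict(front_matter)
    updated["syndicated"] = True
    return updated
```

With a post like `{"syndicate_to": ["bluesky", "mastodon"], "microblog_content": "New post! #hugo"}`, the first call to `should_syndicate` returns True, and it returns False once `mark_syndicated` has been applied.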

Minding the gap

There’s one problem I have with this approach and my stack of Hugo > Google Cloud Storage bucket > Cloudflare (for DNS/HTTPS/caching). I alluded to it in my first post: if I run posse.py too soon, the posts won’t be live, and the script aborts syndication. This is a race condition: the deployment and the syndication script are racing to finish first.

I came up with three solutions for handling this race condition:

- Manually wait before executing the script.

- Add this to a build/deploy script and use the sleep 30 command to try and let my deployment finish.

- Write a small loop to poll the URL on small intervals until I can syndicate.

I talked about manually working through the commands in the previous section, but here’s how the build/deploy script and sleep would work as a bash script:

# 1. Clean and build

rm -rf public

hugo --minify

# 2. Sync to GCS

gsutil -m rsync -r -d public gs://example-project

# 3. The pause

echo "Waiting 30 seconds for Cloudflare propagation..."

sleep 30

# 4. Attempt to syndicate

python posse.py content/blog

I’m toying around with a Python script handling build and deploy operations (apologies if I’ve accidentally doubled posts in your RSS reader). The thought is it can pull together some of my other utilities written in Python (like a script which backs up screenshots, since I don’t store them in GitHub) into a build, deploy, syndicate, and clean-up process.
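As a rough idea of what that Python version could look like, here’s a sketch that runs the same steps as the bash script through subprocess. The function names and parameters are hypothetical, and the fixed pause is just the crude option from the list above. A polling loop could replace it.

```python
import shutil
import subprocess
import sys
import time


def deploy_commands(bucket, content_dir="content/blog"):
    """Return the external commands for build, sync, and syndicate, in order."""
    return [
        ["hugo", "--minify"],
        ["gsutil", "-m", "rsync", "-r", "-d", "public", bucket],
        [sys.executable, "posse.py", content_dir],
    ]


def run_pipeline(bucket, output_dir="public", pause_seconds=30,
                 runner=subprocess.run, sleep=time.sleep):
    """Clean the output folder, then build, sync, wait, and syndicate."""
    shutil.rmtree(output_dir, ignore_errors=True)
    build, sync, syndicate = deploy_commands(bucket)
    # check=True raises CalledProcessError if a step exits non-zero,
    # so a failed build never reaches the syndication step
    runner(build, check=True)
    runner(sync, check=True)
    sleep(pause_seconds)  # give the deployment time to become visible
    runner(syndicate, check=True)


if __name__ == "__main__":
    run_pipeline("gs://example-project")
```

One caveat: on Windows, the gsutil entry may need to be written as gsutil.cmd for subprocess to find it without a shell.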

Here’s how a polling loop would look in Python:

# Other libraries
import logging
import time

import requests

# Other methods

def check_url_with_retry(url, max_retries=5, delay=10):
    """Poll the URL until it returns 200 or the retries run out."""
    for attempt in range(max_retries):
        try:
            response = requests.get(url, timeout=5)
            if response.status_code == 200:
                return True
            print(f"URL not ready ({response.status_code}). Retrying in {delay}s...")
        except requests.RequestException:
            print(f"Connection error. Retrying in {delay}s...")
        time.sleep(delay)
    return False

# Main execution loop (this check runs inside the loop over posts):
if not check_url_with_retry(url):
    logging.error(f"Skipping {title}: URL not accessible after retries.")
    continue

Future directions

As I’ve gone through this, I’ve been keeping note of various improvements that I can make for the script:

- My first test turned up something I missed during my Bluesky research, namely how Bluesky handles hashtags. Bluesky requires hashtags to be parsed and added as metadata when creating a post. On Mastodon, hashtags are plain text. Adding a separate method to parse and extract hashtags for Bluesky is a high-priority fix for now.

- Better handling for parsing front matter (cf. the issue with a hard return before the front matter).

- Writing links to Mastodon and Bluesky back into the front matter for the post.

- Hugo is one SSG among others, and I’m not sure about compatibility, but it might be fun to modify or extend this script to support other blogging platforms. The biggest barrier is that I’ve hardcoded the content folder structure into this script. It might not be too big of a lift to make that an argument for the script, but I’ve got some ideas to work around this.

- Posts without linking back to this site: This gets me really close to using this site as a headless content management system for my social media. The idea is to add a folder to my Hugo project where I can write a short post, and syndicate that to the microblogging services, without publishing the post to the website and linking back to it. This Hugo site becomes my authoring environment for social media posts.

- Obsidian is a note-taking app that I enjoy using. It has a similar structure to Hugo (a file directory of Markdown files with front matter). If I support posts without linking back to this site, this is a very cool extension of the idea outside of blogging and into note-taking. You could use Obsidian as a weird headless CMS for social media.

- Expanding to other social media platforms. If I’m really all about this site as a headless CMS for all my professional social media, then LinkedIn is a natural next step. So is Twitter/X.
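To give a sense of the hashtag fix at the top of this list, here’s a sketch of extracting tags and building the metadata Bluesky expects. Bluesky represents rich text as facets whose positions are counted in UTF-8 bytes, with a feature of type app.bsky.richtext.facet#tag for each hashtag. The function name and the simplified regular expression are my own assumptions for this example, and the pattern is stricter than what the apps actually accept.

```python
import re

# Simplified pattern: '#' at the start of the text or after whitespace,
# followed by word characters. Real-world tag rules are looser than this.
HASHTAG_PATTERN = re.compile(r"(?:^|(?<=\s))#(\w+)")


def hashtag_facets(text):
    """Build Bluesky-style tag facets with UTF-8 byte offsets."""
    facets = []
    for match in HASHTAG_PATTERN.finditer(text):
        # Facet indices count UTF-8 bytes, not Python string characters
        byte_start = len(text[:match.start()].encode("utf-8"))
        byte_end = len(text[:match.end()].encode("utf-8"))
        facets.append({
            "index": {"byteStart": byte_start, "byteEnd": byte_end},
            "features": [{
                "$type": "app.bsky.richtext.facet#tag",
                "tag": match.group(1),  # tag text without the leading '#'
            }],
        })
    return facets
```

The byte offsets are the part that’s easy to get wrong: any accented character or emoji before a hashtag shifts the positions, which is why the sketch encodes the preceding text before measuring it. The resulting list would be passed along as the facets of the post record, while Mastodon can keep receiving the plain text as-is.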

While I want to get back to my writing, I’ll probably work through these issues and continue to blog about developing and improving this script. Maybe I’ll even have to rename the repository if I go far enough down the “SSG agnostic” route.

Conclusion

This has been a surprisingly fun rabbit hole to go down.

It combines practical coding with trying to find a better way to use the internet, built around my needs for writing and focus. If you’re feeling like you don’t have a lot of agency when it comes to your publishing pipeline, I hope this inspires you to try and build something similar. I can retain my focus while writing and easily share my content with more people (RSS, Bluesky, and Mastodon are all ways to follow this blog). While I don’t think I’ll syndicate shorter link blog posts, I’m excited to share more of my original pieces.

I’ve been balancing working on this with several ideas for long-form content for this blog. But I really wanted to get all this working before I started making those posts. There was a bit of back and forth as I got more into those ideas. But I’m glad this is in a usable and sharable state.

Expect a flurry of content before the end of the year. I’ve got some really interesting ideas about AI and automation for technical writers simmering on the back burner. After I clear that backlog, I’ll probably gravitate back towards a balance of link blogging content, long-form posts, and technical projects that feels sustainable and aligns with my mood. If you’re reading this on social media, let me know what you’d be most interested in.

But I’m enjoying what this site is becoming and looking forward to continuing to blog and build it outwards.